How to generate nicer sitemaps with the sitemap_generator gem

If you've ever set up a sitemap in Rails, you might have used the sitemap_generator gem (docs: /kjvarga/sitemap_generator) to automatically generate an XML sitemap.

On a recent client project we were generating a single sitemap for a variety of content types, including static pages, blog posts, and 2-3 custom content directories.

You can imagine a basic config/sitemap.rb setup like this one, based on the sitemap_generator README:

    SitemapGenerator::Sitemap.default_host = 'http://example.com'

    SitemapGenerator::Sitemap.create do
      add '/about', :changefreq => 'daily', :priority => 0.9
      add '/contact_us', :changefreq => 'weekly'

      Article.published.each do |article|
        add article_path(article), priority: 0.64, changefreq: 'weekly'
      end
    end

    SitemapGenerator::Sitemap.ping_search_engines # Not needed if you use the rake tasks

I want to walk through a few changes I made to improve this sitemap and why.

Break Into Sitemap Index + Multiple Sitemaps

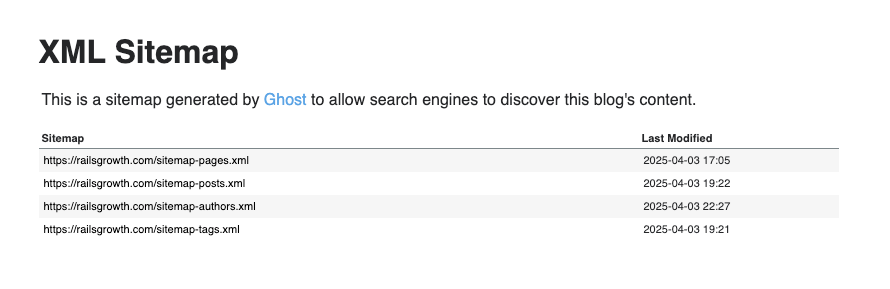

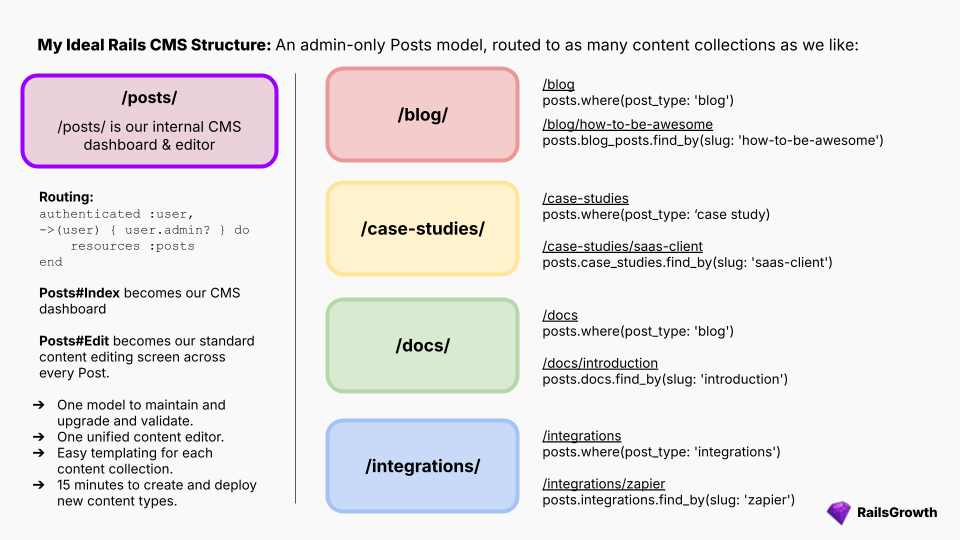

I prefer to generate multiple individual sitemaps for different content directories.

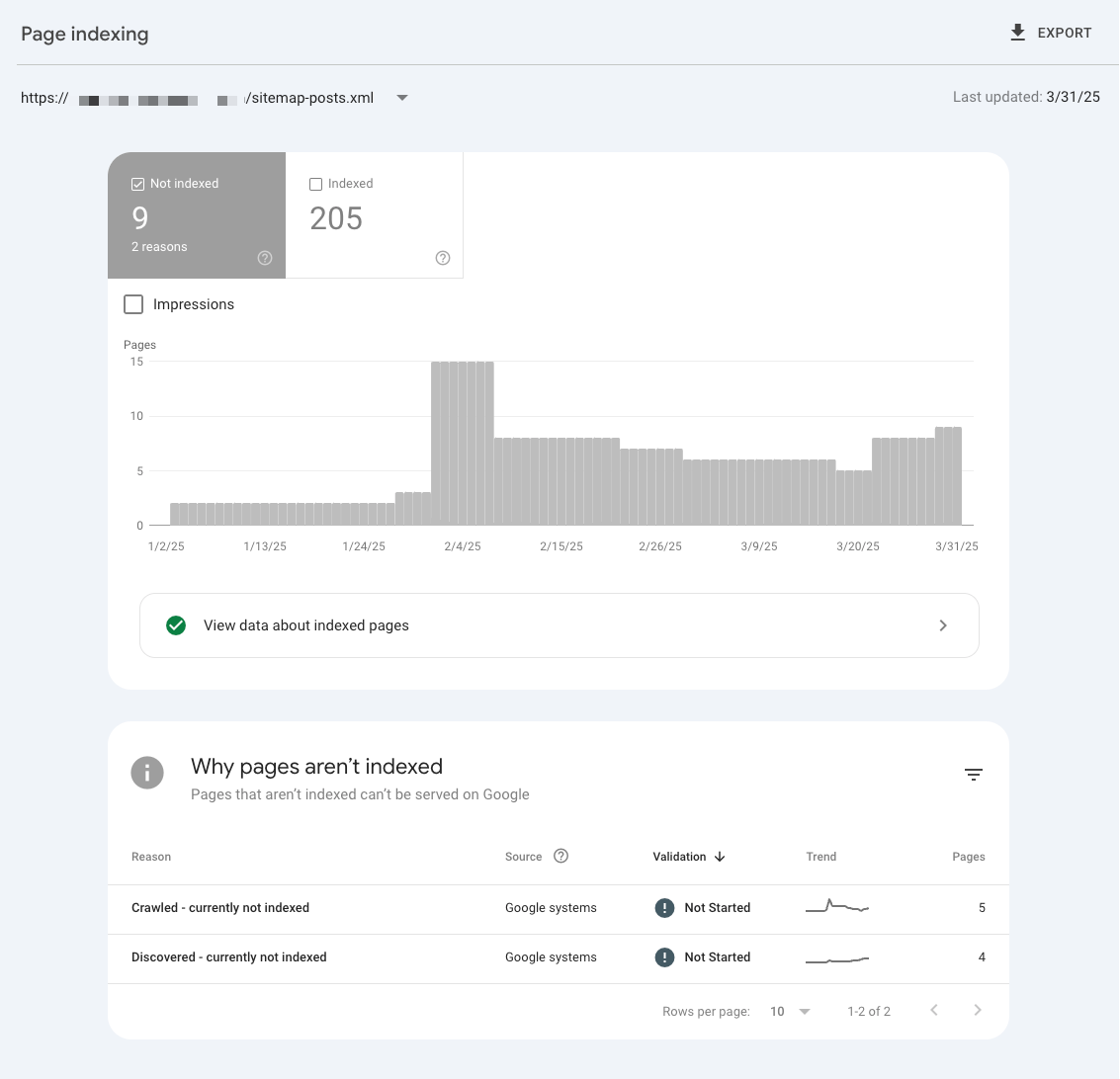

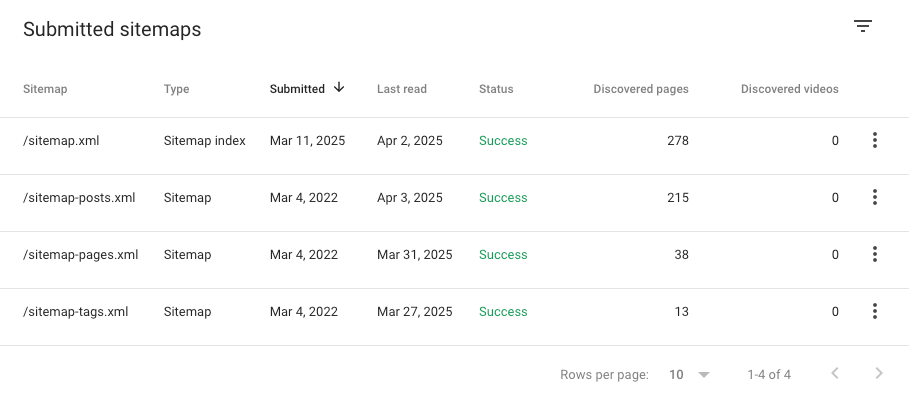

That's because when you submit those sitemaps to Google Search Console, it's much easier to analyze URL performance by content type:

Now, after submitting each of those sitemaps into Search Console, I can get more granular breakdowns of which content is being indexed and which isn't, which helps me make decisions around internal linking, identify why pages aren't ranking, etc.

Break Sitemap into separate files using group(filename: xyz)

Sitemap_generator has a nice built-in group feature to handle this:

    # Set the root domain
    SitemapGenerator::Sitemap.default_host = "https://www.example.com"

    SitemapGenerator::Sitemap.create do
      group(filename: :sitemap_pages) do
        add '/about', :changefreq => 'daily', :priority => 0.9
        add '/contact_us', :changefreq => 'weekly'
      end

      group(filename: :sitemap_articles) do
        Article.published.each do |article|
          add article_path(article), priority: 0.64, changefreq: 'weekly'
        end
      end
    end

Add the root back to the pages group using include_root: true

When you use the group feature, sitemap_generator stops adding your homepage to a sitemap. You can add it back using include_root: true in the pages group we established above:

    group(filename: :sitemap_pages, include_root: true) do
      add '/about', :changefreq => 'daily', :priority => 0.9
      add '/contact_us', :changefreq => 'weekly'
    end

Set create_index = true to generate a sitemap_index file

Our next step is to tell sitemap_generator to build a sitemap_index file. A sitemap_index file is simply an index of all of your grouped sitemaps.

It is a nice feature because we can add a single sitemap reference to robots.txt and bots will find all of the sitemaps we might generate in the future:

    # robots.txt
    Sitemap: https://www.example.com/sitemaps/sitemap_index.xml.gz

No matter how many new groups we add to our config/sitemap.rb, they'll automatically be referenced by this robots.txt entry. For big sites that is huge because it will automatically include sitemaps that get paginated or broken up after exceeding the maximum URL count per sitemap file.
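To put a number on that maximum: the sitemaps.org protocol caps a single sitemap file at 50,000 URLs. The sketch below is plain Ruby, not sitemap_generator's internals, and `sitemap_files_needed` is a hypothetical helper name. It only illustrates how many files a group would spill into once it passes the cap.

```ruby
# The sitemaps.org protocol caps a single sitemap file at 50,000 URLs.
MAX_URLS_PER_SITEMAP = 50_000

# Hypothetical helper (not part of sitemap_generator): how many files a
# group needs once its URL list is split at the cap.
def sitemap_files_needed(url_count, cap = MAX_URLS_PER_SITEMAP)
  (url_count + cap - 1) / cap # integer ceiling division
end

puts sitemap_files_needed(2_713)   # a small group fits in one file
puts sitemap_files_needed(120_000) # a large group spills into several
```

Every one of those extra files ends up listed in the index, which is why a single robots.txt entry keeps working as the site grows.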

We can turn the index on by adding the following before our create command:

    # Create a sitemap index which points to all sitemap files
    SitemapGenerator::Sitemap.create_index = true

Many CMSs or SEO plugins will name this file sitemap_index.xml by default. Sitemap_generator doesn't do that; it just names the file sitemap.xml. If we'd like, we can override the index filename in the create command to make it more explicit:

    # Set the root domain
    SitemapGenerator::Sitemap.default_host = "https://www.example.com"

    # Create a sitemap index which points to all sitemap files
    SitemapGenerator::Sitemap.create_index = true

    SitemapGenerator::Sitemap.create(filename: 'sitemap_index') do
      group(filename: :sitemap_pages, include_root: true) do
        add '/about', :changefreq => 'daily', :priority => 0.9
        add '/contact_us', :changefreq => 'weekly'
      end

      group(filename: :sitemap_articles) do
        Article.published.each do |article|
          add article_path(article), priority: 0.64, changefreq: 'weekly'
        end
      end
    end

Our current config outputs a renamed sitemap_index.xml.gz file, plus sitemap_pages.xml.gz and sitemap_articles.xml.gz.
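For reference, a sitemap index is a small XML document that lists each child sitemap in a `<sitemap><loc>` entry, as defined by the sitemaps.org protocol. The sketch below builds that shape in plain Ruby so you know what to expect when you open the file. `build_sitemap_index` is a hypothetical helper for illustration only; sitemap_generator writes the real file for you, and its output may include extra fields such as `lastmod`.

```ruby
# Sketch of the XML shape of a sitemap index (per the sitemaps.org protocol).
# `build_sitemap_index` is a hypothetical helper, not part of sitemap_generator.
def build_sitemap_index(sitemap_urls)
  entries = sitemap_urls.map do |url|
    # encode(xml: :text) escapes &, < and > so the URL is safe inside XML
    "  <sitemap>\n    <loc>#{url.encode(xml: :text)}</loc>\n  </sitemap>"
  end

  <<~XML
    <?xml version="1.0" encoding="UTF-8"?>
    <sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
    #{entries.join("\n")}
    </sitemapindex>
  XML
end

puts build_sitemap_index([
  "https://www.example.com/sitemap_pages.xml.gz",
  "https://www.example.com/sitemap_articles.xml.gz"
])
```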

Generate both .xml and .xml.gz sitemap versions

By default, sitemap_generator will generate compressed sitemaps, meaning a gzipped .xml.gz file.

Those are smaller in filesize and better for serving to bots as well as for submitting to Google Search Console.

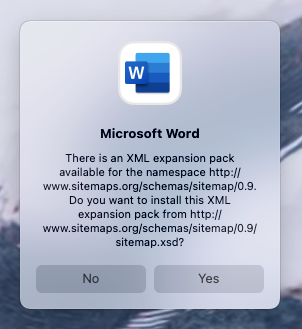

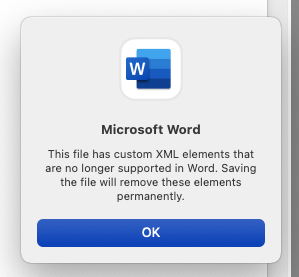

But they don't render in the browser; they download when you load them. And I don't know about you, but Microsoft Word is really excited to be the default app on my Mac when I unzip them and try to load the .xml file locally.

The fun journey every single time Word opens up an XML file. I should probably set a different default app for those files.

So instead, it's nice to generate an uncompressed .xml file as well, which can be viewed in the browser. This makes it much easier for developers to load the .xml version in local, staging, and production to see how URLs are being loaded in each sitemap file.

Thankfully, we can do this pretty easily by setting the compress option and running SitemapGenerator::Sitemap.create twice:

    # Set the root domain
    SitemapGenerator::Sitemap.default_host = "https://www.example.com"

    # Create a sitemap index which points to all sitemap files
    SitemapGenerator::Sitemap.create_index = true

    # Generate both compressed and uncompressed versions
    [true, false].each do |compress_value|
      SitemapGenerator::Sitemap.compress = compress_value

      SitemapGenerator::Sitemap.create(filename: 'sitemap_index') do
        group(filename: :sitemap_pages, include_root: true) do
          add '/about', :changefreq => 'daily', :priority => 0.9
          add '/contact_us', :changefreq => 'weekly'
        end

        group(filename: :sitemap_articles) do
          Article.published.each do |article|
            add article_path(article), priority: 0.64, changefreq: 'weekly'
          end
        end
      end
    end

This change allows us to generate both .xml and .xml.gz sitemaps.

In this example we iterate over the array [true, false], assigning each value to SitemapGenerator::Sitemap.compress before running .create. The first pass writes the .xml.gz files and the second writes plain .xml copies of every sitemap file.

Here's an example of what our output looks like for a site with 4 groups, a sitemap index, and both .xml and .xml.gz output:

    In '/Users/kanejamison/Github/ltbweb/public/':
    + sitemap_pages.xml.gz 26 links / 591 Bytes
    + sitemap_blog.xml.gz 22 links / 911 Bytes
    + sitemap_resources.xml.gz 2713 links / 40.2 KB
    + sitemap_services.xml.gz 19 links / 453 Bytes
    + sitemap_sitemap_index.xml.gz 4 sitemaps / 263 Bytes
    Sitemap stats: 2,780 links / 4 sitemaps / 0m00s

    In '/Users/kanejamison/Github/ltbweb/public/':
    + sitemap_pages.xml 26 links / 4.71 KB
    + sitemap_blog.xml 22 links / 5.14 KB
    + sitemap_resources.xml 2713 links / 510 KB
    + sitemap_services.xml 19 links / 3.97 KB
    + sitemap_sitemap_index.xml 4 sitemaps / 776 Bytes
    Sitemap stats: 2,780 links / 4 sitemaps / 0m00s

Take note of the file size difference on the gzipped versions: sitemap_resources, for example, drops from 510 KB to 40.2 KB. It's certainly nice to point bots to those versions, especially with how much AI bot activity is happening nowadays.
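If you want to see that size gap for yourself without running the generator, Ruby's standard Zlib library can gzip a string in memory. This is a rough sketch with made-up data: `sample_sitemap_xml` is a hypothetical helper and the article URLs are invented, so the exact byte counts will differ from a real sitemap.

```ruby
require "zlib"

# Hypothetical helper: builds a throwaway sitemap with `count` made-up URLs.
def sample_sitemap_xml(count)
  entries = (1..count).map do |i|
    "  <url><loc>https://www.example.com/articles/#{i}</loc></url>"
  end

  <<~XML
    <?xml version="1.0" encoding="UTF-8"?>
    <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
    #{entries.join("\n")}
    </urlset>
  XML
end

xml      = sample_sitemap_xml(500)
gzipped  = Zlib.gzip(xml)
# gunzip returns a binary string, so tag it as UTF-8 again before comparing
restored = Zlib.gunzip(gzipped).force_encoding(Encoding::UTF_8)

puts "uncompressed: #{xml.bytesize} bytes"
puts "gzipped:      #{gzipped.bytesize} bytes"
puts "round trip ok: #{restored == xml}"
```

The same library can print a generated .xml.gz file straight to the terminal with `Zlib::GzipReader.open(path) { |gz| puts gz.read }`, which sidesteps the unzip-and-open-in-Word routine.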

If you're on Heroku, you're not done.

This part gets a little confusing.

If you're on a host that lets you write static XML files to the server, you might be good to go. Set up a cron job that runs rake sitemap:refresh and you'll have a fresh sitemap as often as you like.
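For the cron side, one option the sitemap_generator README mentions is the whenever gem. A minimal sketch, assuming whenever is in your Gemfile and this lives in config/schedule.rb; the 5:00 am time is only an example:

```ruby
# config/schedule.rb (read by the whenever gem, which writes the crontab entry)
# Assumption: whenever is installed; adjust the frequency to suit your site.
every 1.day, at: '5:00 am' do
  rake "-s sitemap:refresh"
end
```

If you'd rather not ping search engines on every run, the gem also ships a sitemap:refresh:no_ping task you can schedule instead.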

But if you're on Heroku, you can't build static assets on the server and keep them there. If I understand correctly, they'll let you build the files in tmp/, but you can't just drop them in public/. Instead you'll need to set up a storage adapter, which is covered at length in sitemap_generator's docs:

Sometimes it is desirable to host your sitemap files on a remote server, and point robots and search engines to the remote files. For example, if you are using a host like Heroku, which doesn't allow writing to the local filesystem. You still require some write access, because the sitemap files need to be written out before uploading. So generally a host will give you write access to a temporary directory. On Heroku this is tmp/ within your application directory.

Once you switch to a storage adapter, a lot of our setup above becomes a problem.

As sitemap_generator explains in their section on storage adapters:

Note that SitemapGenerator will automatically turn off include_index in this case because the sitemaps_host does not match the default_host. The link to the sitemap index file that would otherwise be included would point to a different host than the rest of the links in the sitemap, something that the sitemap rules forbid.

So, that's a problem - if we can't store our sitemap on the server, and we can't generate a sitemap index, then we're left with a couple of options.

One is to hardcode our sitemap file references in robots.txt. On a smaller site that might be feasible, but if we're creating multiple sitemaps of a certain type, e.g. sitemap_posts.xml and sitemap_posts2.xml, we'd have to keep track of how many sitemaps are getting created and update the robots.txt entries by hand.
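If you do go the hardcoded route, one way to cut down on the bookkeeping is to generate the robots.txt lines from a list of filenames instead of typing them out. This is only a sketch: `robots_sitemap_lines` is a hypothetical helper, the filenames are made up, and where the list comes from (a bucket listing, a deploy script, etc.) is left open.

```ruby
# Hypothetical helper: turn a list of sitemap filenames into robots.txt lines.
def robots_sitemap_lines(host, filenames)
  base = host.chomp("/") # avoid a double slash if the host ends with one
  filenames.sort.map { |name| "Sitemap: #{base}/#{name}" }
end

# Filenames below are invented for illustration.
files = ["sitemap_posts2.xml.gz", "sitemap_pages.xml.gz", "sitemap_posts.xml.gz"]
puts robots_sitemap_lines("https://www.example.com", files)
```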

[post in progress until this note is gone, still finishing this end section]

STILL TO COVER:

- REVERSE PROXY / NGINX to serve files from our domain URLs.

- Or, whether redirects will work.

- Whether we can override the sitemap_index disabled feature if we're willing to handle the URL controls in one of those ways.
